Navigating Legal, Moral, and Practical Challenges

Introduction

In the digital age, social media platforms have become the new public squares where millions of people worldwide share their ideas, opinions, and experiences. What began as a means to connect friends and family has evolved into a set of powerful tools that can influence elections, amplify social movements, and even help shape policy. With this growing influence comes greater responsibility, and the question of who bears it increasingly lies at the heart of debates about the role of technology in our society.

A common question arises: if you hosted a paramilitary group in your home to discuss its activities, you could be held accountable for what happens there. If the same group discusses its plans on a social media platform, should the CEOs of these platforms not be responsible for what is said on their sites? This question highlights a perceived double standard and raises critical legal, ethical, and practical issues.

This blog will explore the many facets of responsibility on social media, including the comparison between individual responsibility in physical spaces and the responsibility of social media companies in the digital realm. We will also discuss the growing public pressure that forces platforms to adjust their policies, as well as possible solutions to make them more accountable for the content their users share.

§

1. Legal Aspects of Responsibility on Social Media

The legal position of social media platforms differs significantly from that of individuals or traditional media. In many countries, these companies enjoy legal protections that shield them from liability for the content their users post. This was originally intended to promote innovation and free speech, but over the years, it has sparked debate over whether these protections are still appropriate given the immense influence these platforms now wield.

Consider this: If a paramilitary group were to gather in your home and discuss illegal activities, you might be held accountable under the law. This accountability stems from your control over the space and what happens within it. By contrast, social media platforms like Facebook or Twitter, which host billions of users discussing all manner of topics, often enjoy legal protections such as Section 230 of the Communications Decency Act in the United States. This law generally shields platforms from civil liability for user-generated content and separately protects their good-faith decisions to remove material they find objectionable. The immunity does not depend on how diligently a platform moderates, although it has exceptions, including for federal criminal law and intellectual property claims.

However, this legal shield has been increasingly criticized. Critics argue that social media companies, much like an individual who hosts a gathering in their home, should be held accountable for what is discussed and shared on their platforms, especially when it involves harmful or illegal activities. Recent legislation, such as the European Union’s Digital Services Act, seeks to address this by requiring platforms to act on notices of illegal content, explain their moderation decisions, and, in the case of the largest platforms, assess and mitigate systemic risks, while stopping short of a general obligation to monitor everything users post.

The question of whether platforms should be treated more like publishers, who are legally liable for the content they distribute, remains a contentious issue. While the scale of social media platforms complicates this comparison, the fundamental issue of responsibility—whether in a physical space like a home or a digital platform—remains at the heart of the debate.

§

2. Moral Responsibility of Social Media Platforms

Beyond legal obligations, there is a widely recognized belief that social media platforms bear a significant moral responsibility for what happens on their sites. This responsibility stems from the fact that platforms like Facebook, Twitter, and YouTube have become the de facto public squares where much of the global discourse takes place. With this immense power comes an equally significant duty to ensure that these spaces are not misused to cause harm, spread hate, or destabilize communities.

2.1 The Analogy of Hosting in Your Home

To understand this moral responsibility, consider the analogy of hosting a paramilitary group in your home. Even if the law does not hold you directly accountable for the group’s actions, many would argue that you bear a moral responsibility for allowing such a gathering under your roof. By providing the space, you are implicitly endorsing or at least enabling their discussion and potential actions. Similarly, social media platforms, despite being legally protected in many cases, face moral scrutiny for the content they allow to proliferate on their sites. The reach and influence of these platforms mean that their decisions—or lack thereof—can have far-reaching consequences.

2.2 Failures in Moral Responsibility

There have been numerous instances where social media platforms have failed to live up to this moral responsibility, allowing harmful content to spread unchecked. This failure has led to significant real-world consequences:

- Violence: In some cases, content that incites violence or hatred has been linked to real-world attacks and conflicts. The Christchurch mosque shootings, which were live-streamed on Facebook, are a tragic example of how platforms can be used to broadcast and amplify violence when moderation fails to keep up.

- Misinformation: The spread of misinformation, particularly during crises such as the COVID-19 pandemic, has had severe impacts on public health and safety. False claims about the virus, vaccines, and treatments have led to vaccine hesitancy, the promotion of dangerous “cures,” and a general erosion of trust in public health institutions.

- Erosion of Public Trust: The unchecked spread of harmful content has also contributed to a broader erosion of public trust in social media platforms. Users increasingly question the integrity and motivations of these companies, leading to calls for greater regulation and accountability.

2.3 Upholding Moral Duty

Conversely, when social media platforms take decisive action, such as banning accounts that promote hate speech or violence, they are often seen as upholding their moral duty to protect users and society at large. Examples include Twitter’s decision to ban then-U.S. President Donald Trump after the storming of the Capitol on January 6, 2021, and Facebook’s efforts to crack down on misinformation related to COVID-19.

However, these actions often come with their own set of challenges and controversies. The balancing act between protecting free speech and preventing harm is delicate, and platforms are frequently criticized regardless of the stance they take. Nevertheless, the moral imperative to act in the face of potential harm remains strong.

2.4 The Complexity of Moral Responsibility

Moral responsibility is inherently complex and often subjective, influenced by cultural, political, and social factors. What one community or society views as morally unacceptable may be seen as permissible or even necessary in another. This complexity is further exacerbated by the global nature of social media platforms, which must navigate differing legal standards and societal norms across various regions.

For example, content that is considered hate speech and banned in Europe might be protected under the First Amendment in the United States. Similarly, political speech that is censored in one country might be freely available in another. Social media companies must navigate these differences while striving to uphold a consistent set of ethical standards.

2.5 Ethical Choices and Corporate Practices

Just as individuals are expected to make ethical choices in their personal lives, social media companies are increasingly expected to consider the ethical implications of their policies and practices. This involves making difficult decisions about what content to allow, how to moderate discussions, and how to respond to harmful behavior on their platforms.

- Proactive Measures: Companies can take proactive measures to prevent harm, such as implementing stronger content moderation practices, using AI to detect and remove harmful content, and providing users with tools to report abuse.

- Transparency and Accountability: Platforms can enhance their moral standing by being transparent about their decision-making processes and holding themselves accountable for their actions. This includes publishing regular transparency reports, engaging with users and stakeholders, and being open to external audits and reviews.

- Corporate Social Responsibility: Beyond content moderation, social media companies can engage in broader corporate social responsibility initiatives, such as supporting digital literacy programs, combating online harassment, and promoting positive social causes.

2.6 The Moral Imperative

Social media platforms wield significant influence over global discourse, and with that influence comes a profound moral responsibility. While legal protections may shield these companies from certain liabilities, they cannot escape the ethical obligations they have to their users and to society as a whole. By recognizing and acting upon this moral responsibility, social media platforms can help create a safer, more inclusive, and more equitable online environment. This requires ongoing reflection, proactive measures, and a commitment to prioritizing the well-being of users over profits.

§

3. Public Pressure and Reputation Management

In addition to their legal and moral responsibilities, social media platforms are increasingly aware of the crucial role public perception plays in their success. In an era where users, advertisers, and broader society are acutely aware of the impact these platforms have on public discourse and behavior, companies must navigate carefully to protect their brand and retain their user base. The power of public pressure should not be underestimated, as it often drives platforms to take action, even in the absence of direct legal requirements.

3.1 The Influence of Public Opinion

Public opinion can hold individuals and companies accountable in powerful ways. For instance, just as an individual might face social backlash for hosting a controversial group in their home, social media platforms are subject to the court of public opinion. Their reputation can suffer significantly if they are perceived as allowing harmful content to proliferate unchecked. This reputational risk frequently compels platforms to take action, especially when they face widespread public scrutiny.

One of the most prominent examples of public pressure influencing a social media platform is the fallout from the Cambridge Analytica scandal. In 2018, it was revealed that Cambridge Analytica had improperly accessed the personal data of tens of millions of Facebook users without their consent, using this data to influence political campaigns. The public outcry was swift and intense, leading to a significant reputational hit for Facebook. In response, Facebook had to overhaul its privacy policies, increase transparency, and implement stricter controls to regain user trust. This scenario illustrates how public pressure can force platforms to take responsibility, even if they are not legally compelled to do so.

3.2 The Financial Impact of Public Pressure

The reputational risks associated with public opinion also translate into financial risks. The “Stop Hate for Profit” campaign is a prime example of how public pressure can lead to tangible financial consequences for social media platforms. This campaign, which emerged in 2020, called on major advertisers to boycott Facebook in protest of the platform’s handling of hate speech and misinformation. The campaign gained significant traction, with hundreds of companies pulling their ads from Facebook, including major brands like Coca-Cola, Unilever, and Verizon.

This advertiser boycott not only highlighted the power of public opinion but also demonstrated the financial impact that can result from reputational damage. Faced with the loss of advertising revenue, Facebook was forced to reassess its content moderation policies and take steps to address the concerns raised by the campaign. This included stricter enforcement of community standards, increased efforts to combat hate speech, and greater transparency about its content moderation practices.

3.3 Reputation Management Strategies

To navigate the challenges of public pressure, social media platforms have increasingly adopted strategies to manage their reputation and mitigate risks. These strategies include:

- Proactive Communication: Platforms must maintain open lines of communication with their users, stakeholders, and the broader public. By proactively addressing issues, providing timely updates, and being transparent about their practices, platforms can build trust and demonstrate their commitment to responsible behavior.

- Responsive Action: When a crisis arises, such as a data breach or the spread of harmful content, platforms need to respond quickly and effectively. This includes taking immediate action to address the problem, issuing public apologies if necessary, and outlining the steps they will take to prevent similar issues in the future.

- Corporate Social Responsibility (CSR): Platforms can strengthen their reputation by engaging in CSR initiatives that align with their values and the expectations of their users. This could involve supporting digital literacy programs, partnering with organizations to combat online harassment, or promoting social causes that resonate with their user base.

- Regular Transparency Reports: To build and maintain trust, platforms should regularly publish transparency reports that detail their content moderation efforts, data privacy practices, and responses to government requests. These reports help to hold platforms accountable and provide the public with insight into how they are managing their responsibilities.

3.4 The Long-Term Implications of Public Pressure

Public pressure is not just a short-term concern for social media platforms; it has long-term implications for their sustainability and success. Companies that fail to adequately address public concerns risk losing not only users and advertisers but also their social license to operate. As public awareness of the impact of social media grows, platforms will increasingly be held accountable for the content they host and the behaviors they enable.

Moreover, public pressure can drive regulatory changes. When platforms are perceived as failing to manage their responsibilities, governments may step in to impose stricter regulations, as seen with the European Union’s General Data Protection Regulation (GDPR) and the Digital Services Act. These regulations often reflect the demands of the public for greater accountability and transparency in how platforms operate.

3.5 The Power of Public Pressure

Public pressure is a powerful force that can shape the behavior and policies of social media platforms. While legal and moral responsibilities provide a framework for how these platforms should operate, it is often the court of public opinion that drives immediate and tangible changes. Social media companies must therefore be vigilant in managing their reputation, as the consequences of failing to do so can be both financially damaging and detrimental to their long-term success.

By proactively engaging with their users, responding to crises with transparency and accountability, and aligning their practices with the values of their communities, social media platforms can navigate the complex landscape of public pressure and maintain their position as trusted and responsible entities in the digital world.

§

4. Practical Challenges: Scale and Moderation

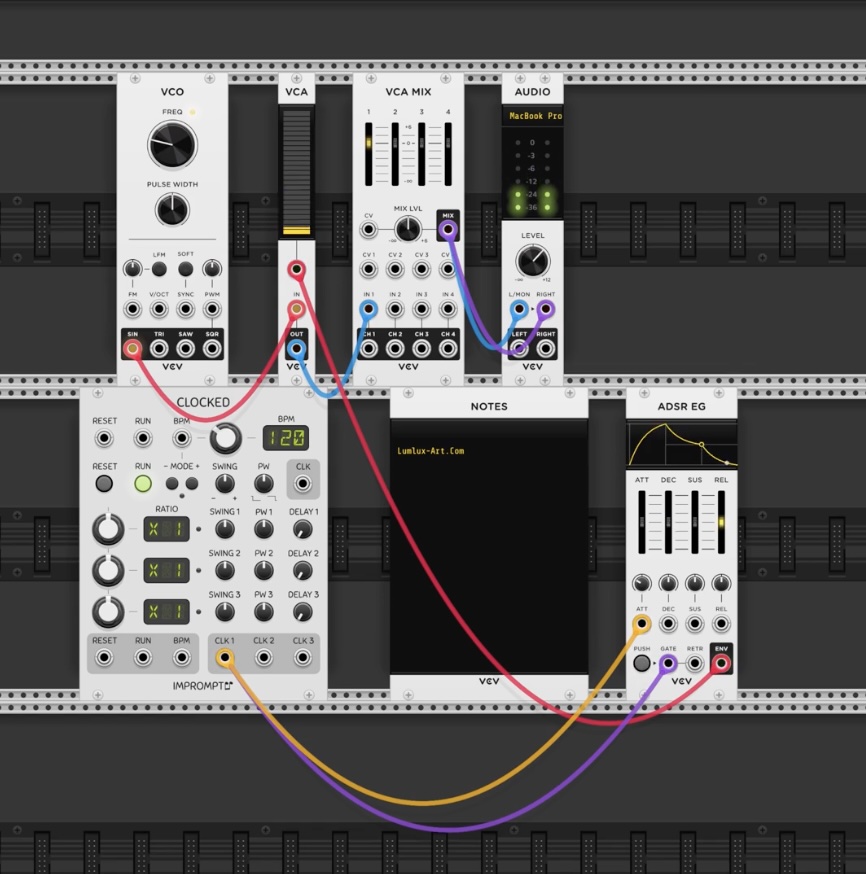

One of the biggest challenges social media platforms face is the sheer scale at which they operate. Billions of users post content daily in dozens of languages and contexts, making content moderation a monumental task. This raises the question: how can a platform effectively take responsibility for content on such a large scale?

Technologically, platforms use artificial intelligence and machine learning to help moderate content. However, these tools have limitations, particularly in understanding context and cultural nuances. While a homeowner might be able to control who enters their home and what is discussed, a platform like Facebook or Twitter cannot as easily monitor every conversation or post.

Human moderators are essential for understanding context and making nuanced decisions, but they face their own set of challenges, including the psychological toll of constant exposure to harmful content. The scale of content on social media platforms is so vast that it is impossible to review everything manually, leading to inevitable gaps in enforcement.

This comparison between individual responsibility and the responsibilities of large platforms highlights the practical difficulties in applying the same standards across vastly different contexts. While a homeowner can be held accountable for what happens in a controlled environment, the global and open nature of social media presents unique challenges that complicate direct comparisons.
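As a rough illustration of the hybrid approach described in this section, the sketch below routes posts by an assumed classifier score: near-certain violations are removed automatically, an uncertain middle band goes to human reviewers, and everything else is published. The names (`Post`, `harm_score`, `triage`), the thresholds, and the `review_capacity` parameter are hypothetical and not taken from any real platform. The point is simply that when the uncertain band exceeds reviewer capacity, some posts stay up unreviewed.

```python
from dataclasses import dataclass

# Hypothetical thresholds, chosen only for illustration.
REMOVE_THRESHOLD = 0.95
REVIEW_THRESHOLD = 0.60


@dataclass
class Post:
    post_id: str
    harm_score: float  # assumed classifier output between 0 and 1


def triage(posts, review_capacity):
    """Split posts into removed, human-review, and published lists.

    Posts in the uncertain band go to human reviewers, highest score
    first, until review_capacity is used up. The remainder stays
    published without review.
    """
    removed, uncertain, published = [], [], []
    for post in posts:
        if post.harm_score >= REMOVE_THRESHOLD:
            removed.append(post)
        elif post.harm_score >= REVIEW_THRESHOLD:
            uncertain.append(post)
        else:
            published.append(post)

    uncertain.sort(key=lambda p: p.harm_score, reverse=True)
    review_queue = uncertain[:review_capacity]
    # Anything beyond reviewer capacity is the enforcement gap.
    published.extend(uncertain[review_capacity:])
    return removed, review_queue, published


posts = [Post("a", 0.99), Post("b", 0.80), Post("c", 0.70), Post("d", 0.10)]
removed, review_queue, published = triage(posts, review_capacity=1)
print([p.post_id for p in removed])       # ['a']
print([p.post_id for p in review_queue])  # ['b']
print([p.post_id for p in published])     # ['d', 'c']
```

With a single reviewer slot, post "c" is neither removed nor reviewed, which is the kind of gap in enforcement that scale makes hard to avoid.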

§

5. Balancing Free Speech and Censorship

One of the most challenging issues social media platforms face is finding the right balance between protecting free speech and regulating harmful content. In many countries, free speech is considered a fundamental right, enshrined in constitutions and legal frameworks as a cornerstone of democratic society. Social media platforms, as modern public forums, are often seen as essential spaces where this freedom must be preserved. However, these platforms also have a responsibility to protect their users from harmful content, including hate speech, misinformation, and incitement to violence. This dual responsibility creates a complex and often controversial dilemma.

5.1 Defining the Boundaries: Free Speech vs. Harmful Content

The debate over free speech on social media becomes particularly contentious when it involves content that is potentially harmful. While platforms strive to protect freedom of expression, they also face the challenge of mitigating the spread of content that could incite violence, promote hate, or destabilize societies.

For example, hate speech, incitement to violence, and calls for civil war are not just morally reprehensible; in many jurisdictions, they are also illegal. Laws against hate crimes, incitement, and racism exist to prevent such speech from leading to real-world harm. In these cases, the protection of free speech must be weighed against the need to prevent violence and uphold public safety.

5.2 Legal Frameworks and Their Application

Different countries have varying legal frameworks for dealing with hate speech and incitement. For instance:

- Hate Speech: In many Western democracies, hate speech laws are designed to prevent expressions that incite hatred against individuals or groups based on attributes such as race, religion, ethnicity, or sexual orientation. While these laws vary in scope and enforcement, they often limit speech that could lead to discrimination, violence, or social unrest.

- Incitement to Violence: Most legal systems criminalize speech that directly incites others to commit violence. This includes speech that encourages violent actions against individuals, groups, or the state. Social media platforms are increasingly expected to monitor and remove content that crosses this legal boundary.

- Incitement to Civil War: Calls for insurrection or civil war are particularly egregious forms of incitement that can threaten national security. Such speech is not protected under free speech laws in most jurisdictions and is subject to criminal prosecution. When high-profile individuals, including social media influencers or political figures, make statements that could be interpreted as inciting civil unrest, serious legal and ethical concerns arise.

5.3 Elon Musk and the Boundaries of Free Speech

In August 2024, amid riots in the United Kingdom, Elon Musk, the owner of Twitter (now rebranded as X), posted that civil war was inevitable. Such remarks, especially when made by influential figures, can have far-reaching consequences. While Musk may argue that his statement falls under the protection of free speech, it also highlights the fine line between expressing a controversial opinion and potentially inciting unrest.

Many countries, including the UK, have laws against inciting violence and promoting civil disorder. If such statements are perceived as a serious threat to public order, they could be subject to legal scrutiny. This illustrates the challenge social media platforms face in moderating content from influential users, who may push the boundaries of free speech in ways that have significant societal impacts.

5.4 Moderation Policies and the Risk of Censorship

Social media platforms often find themselves caught between the need to prevent harmful content and the risk of being accused of censorship. When platforms remove content that is deemed harmful, they may face backlash from users who believe their right to free speech is being infringed upon. On the other hand, if platforms allow harmful content to remain, they can be criticized for enabling the spread of dangerous ideologies or failing to protect vulnerable communities.

For instance, when platforms take down posts that contain hate speech or incitement to violence, some users may argue that their content was merely provocative or controversial but not illegal. This raises important questions about where to draw the line. Is it better to err on the side of caution and remove potentially harmful content, even at the risk of over-censorship? Or should platforms be more lenient, allowing a broader range of speech but risking the spread of harmful ideologies?

5.5 The Role of Laws and Platform Policies

To navigate these challenges, platforms must work within the framework of existing laws while also developing their own policies to address harmful content. These policies often involve:

- Content Guidelines: Clear and transparent guidelines outlining what constitutes hate speech, incitement, and other forms of harmful content. These guidelines help users understand the boundaries of acceptable speech on the platform.

- AI and Human Moderation: A combination of artificial intelligence and human moderators to identify and remove content that violates these guidelines. While AI can help scale moderation efforts, human oversight is crucial for context-sensitive decisions.

- Appeals Processes: Mechanisms for users to appeal content removals or account suspensions. This ensures that moderation decisions are not final and can be reviewed for fairness and accuracy.

- Transparency Reports: Regular reports detailing the types of content removed and the reasons behind those decisions. Transparency helps build trust and accountability, reducing accusations of arbitrary censorship.

5.6 The Need for Ongoing Dialogue

The solution to balancing free speech and censorship is not simple and requires constant adjustments to policy, transparency in moderation decisions, and dialogue with users, legal experts, and civil society organizations. Social media platforms must continually review their role as guardians of digital discourse, especially in a world where the boundaries between online and offline are increasingly blurred.

Engaging with stakeholders, including legal experts, human rights organizations, and user communities, can help platforms navigate these complex issues. By fostering an open dialogue about the challenges of moderating content and the importance of free speech, platforms can work towards solutions that protect both individual rights and public safety.

In conclusion, while free speech is a fundamental right, it is not absolute. The responsibilities of social media platforms extend beyond merely providing a space for expression. They must also consider the potential harm that unchecked speech can cause, particularly when it involves hate speech, incitement to violence, or threats to public order. By carefully balancing these responsibilities, platforms can contribute to a safer, more inclusive online environment while respecting the essential principles of free speech.

§

6. Case Studies and Examples

To better understand the complexity of responsibility on social media platforms, it is helpful to examine some case studies and examples. These case studies illustrate how platforms have responded to controversial situations in the past and what lessons can be learned for the future.

6.1 The Cambridge Analytica Scandal: A Lack of Transparency

One of the most high-profile cases where the responsibility of a social media platform was questioned was the Cambridge Analytica scandal in 2018. It was revealed that the political consultancy firm Cambridge Analytica had gained unauthorized access to the personal data of tens of millions of Facebook users, which was then used to create targeted political ads during election campaigns.

This scandal raised serious concerns about how Facebook handled user data and its responsibility to protect that data. Public outrage was immense, leading to calls for greater transparency and stricter regulation. The incident forced Facebook to review its data protection policies and implement new measures to better safeguard user privacy. Nevertheless, this scandal remains a dark chapter in Facebook’s history, severely damaging trust in the platform.

6.2 The Ban on Donald Trump: A Question of Free Speech vs. Safety

Another example that illustrates the complexity of responsibility on social media platforms is the decision by Twitter and other platforms to ban then-U.S. President Donald Trump following the storming of the Capitol on January 6, 2021. This decision was unprecedented and sparked a global debate about free speech, the power of tech companies, and their responsibility to prevent violence and hate.

Critics of the ban argued that it set a dangerous precedent where private companies have the power to restrict the free speech of political leaders. Others praised the decision as a necessary step to prevent further escalation of violence. This case illustrates the delicate balance social media platforms must strike between protecting public safety and ensuring freedom of speech.

6.3 The Role of Social Media in the Arab Spring: A Double-Edged Sword

The Arab Spring, a series of anti-government protests that spread across the Middle East and North Africa in 2010-2011, is often cited as an example of how social media can be a positive force for change. Platforms like Facebook and Twitter played a crucial role in mobilizing protesters, sharing information, and circumventing state censorship.

However, the Arab Spring also highlighted the darker side of social media. While the platforms gave a voice to oppressed populations, they were also used by governments and other actors to spread misinformation, identify protesters, and coordinate repressive measures. This example underscores how social media platforms can be both a force for liberation and a tool of oppression, and how important it is for these platforms to take their responsibility seriously in complex political situations.

6.4 The Christchurch Mosque Shootings: The Role of Live Streaming

In March 2019, a terrorist attack on two mosques in Christchurch, New Zealand, which killed 51 people, was live-streamed on Facebook by the perpetrator. The video spread rapidly across social media sites and was shared and re-uploaded countless times before platforms could effectively remove it.

This incident highlighted the significant challenges social media platforms face in moderating real-time content, particularly when it comes to live streaming. The rapid dissemination of the video raised questions about the adequacy of existing moderation tools and the platforms’ ability to prevent the spread of violent content.

In response, Facebook and other platforms implemented stricter policies on live streaming and increased investment in AI technologies to detect and remove harmful content more quickly. The Christchurch Call to Action, a pledge by governments and tech companies to eliminate terrorist and violent extremist content online, also emerged from this tragedy. This case underscores the urgent need for platforms to balance the benefits of live streaming with the risks of misuse.

6.5 YouTube and the Rise of Extremist Content

Over the years, YouTube has faced significant criticism for its role in spreading extremist content. The platform’s recommendation algorithm, designed to keep users engaged by suggesting videos, has been accused of promoting increasingly radical content. This phenomenon, often referred to as the “YouTube rabbit hole,” has been linked to the rise of extremist ideologies and conspiracy theories.

For example, a 2018 piece in The New York Times argued that YouTube’s algorithm played a role in radicalizing users by consistently recommending far-right and extremist content. This raised concerns about the responsibility of social media platforms in curating content and the unintended consequences of prioritizing engagement over safety.

In response, YouTube has made efforts to modify its algorithm, demote content that could lead users down extremist paths, and increase the visibility of authoritative sources. However, the balance between curbing harmful content and maintaining an open platform remains a contentious issue. This case study illustrates the challenges platforms face in mitigating the spread of harmful ideologies while maintaining user engagement.
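To make the idea of demoting some content and raising authoritative sources more concrete, here is a purely hypothetical sketch. It is not YouTube’s actual system; the `Video` fields, the multipliers, and the function names are all assumptions made for illustration. It compares a ranking based only on predicted engagement with one that penalizes borderline videos and boosts authoritative ones.

```python
from dataclasses import dataclass

# Hypothetical multipliers, chosen only for illustration.
BORDERLINE_PENALTY = 0.2
AUTHORITATIVE_BOOST = 1.5


@dataclass
class Video:
    title: str
    predicted_engagement: float  # assumed model output, higher is more engaging
    borderline: bool = False     # assumed flag: close to violating policy
    authoritative: bool = False  # assumed flag: source treated as authoritative


def ranking_score(video):
    """Adjust the engagement score with a penalty and a boost."""
    score = video.predicted_engagement
    if video.borderline:
        score *= BORDERLINE_PENALTY
    if video.authoritative:
        score *= AUTHORITATIVE_BOOST
    return score


def recommend(videos, k):
    """Return the top k videos by adjusted score."""
    return sorted(videos, key=ranking_score, reverse=True)[:k]


candidates = [
    Video("conspiracy deep dive", 0.9, borderline=True),
    Video("public health briefing", 0.5, authoritative=True),
    Video("cooking tutorial", 0.6),
]

by_engagement = sorted(
    candidates, key=lambda v: v.predicted_engagement, reverse=True
)
print([v.title for v in by_engagement])
# ['conspiracy deep dive', 'cooking tutorial', 'public health briefing']
print([v.title for v in recommend(candidates, k=3)])
# ['public health briefing', 'cooking tutorial', 'conspiracy deep dive']
```

The borderline video is still available in this sketch, just ranked lower, which reflects the tension the case study describes: demotion reduces reach without removing content outright.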

6.6 Twitter and the #MeToo Movement: Amplifying Voices

The #MeToo movement, which gained momentum in 2017, is a powerful example of how social media can be used for social good. The movement, which began as a way for survivors of sexual harassment and assault to share their stories, quickly went viral on Twitter and other platforms. It became a global phenomenon, leading to widespread discussions about sexual harassment and assault in various industries.

Twitter played a crucial role in amplifying the voices of survivors and providing a platform for people to share their experiences. The hashtag #MeToo was used millions of times across the globe, sparking conversations that led to real-world changes, such as the ousting of powerful figures accused of misconduct and the implementation of stricter workplace policies.

However, the movement also faced challenges, including online harassment and the spread of false accusations. This raised questions about the role of social media platforms in facilitating movements that can have both positive and negative consequences. While Twitter’s open nature allowed the #MeToo movement to flourish, it also exposed the platform’s limitations in protecting users from backlash and misinformation.

This case highlights the double-edged nature of social media platforms: they can empower marginalized voices and drive social change, but they also pose challenges in managing the consequences of widespread, unfiltered discourse.

6.7 Facebook and the Spread of Misinformation during the COVID-19 Pandemic

6.7.1 The Surge of Misinformation

During the COVID-19 pandemic, social media platforms, particularly Facebook, were inundated with misinformation about the virus, its origins, and potential treatments. As the pandemic spread globally, so did false claims about supposed cures, the safety and efficacy of vaccines, and various conspiracy theories. This wave of misinformation had tangible, real-world consequences, including increased vaccine hesitancy and the adoption of dangerous health practices by individuals misled by these false narratives.

6.7.2 Facebook’s Response to Misinformation

Facing immense pressure from governments, public health authorities, and the general public, Facebook had to act quickly to address the proliferation of misinformation on its platform. The company initiated several key measures, including:

- Fact-Checking Partnerships: Facebook expanded its partnerships with third-party fact-checkers to help identify and label false information related to COVID-19. These partners played a critical role in assessing the veracity of content being shared on the platform.

- Content Flagging and Removal: To curb the spread of harmful misinformation, Facebook began flagging content that contained debunked claims. Additionally, the platform removed content that violated its policies, particularly if it posed a direct threat to public health.

- Promoting Reliable Information: Facebook also took steps to promote reliable information by directing users to authoritative sources, such as the World Health Organization (WHO) and the Centers for Disease Control and Prevention (CDC), whenever they searched for COVID-19-related topics.

6.7.3 Ongoing Challenges and Criticisms

Despite these efforts, the spread of misinformation on Facebook continued to be a significant issue. The platform struggled to keep up with the vast amount of content being generated and shared daily. Additionally, Facebook’s algorithms, which are designed to prioritize engaging content, often inadvertently amplified sensational and misleading posts, making it difficult to control the flow of misinformation.

Critics argued that Facebook’s response was insufficient, pointing out that the platform’s measures were often reactive rather than proactive. Moreover, there were concerns about the effectiveness of fact-checking, as flagged content often remained visible to users or was reshared with disclaimers that were ignored by those determined to spread falsehoods.

6.7.4 Balancing Free Speech and Public Safety

The spread of COVID-19 misinformation on Facebook highlights the broader challenges that social media companies face in balancing free speech with the responsibility to prevent the dissemination of harmful misinformation. While it is crucial to allow open discourse and debate, the pandemic underscored the potential dangers when false information goes unchecked, particularly in a public health crisis.

This situation sparked ongoing debates about the role of social media platforms in safeguarding public health. Questions arose about the extent to which these platforms should intervene in user-generated content and where to draw the line between moderating harmful content and respecting freedom of expression.

6.7.5 Lessons for the Future

The COVID-19 pandemic has been a significant learning experience for Facebook and other social media platforms. It has become clear that more robust mechanisms are needed to prevent the rapid spread of misinformation, especially during global crises. Future strategies may involve:

- Enhanced AI Moderation: Improving artificial intelligence tools to detect and mitigate misinformation before it gains traction.

- Greater Transparency: Providing more transparency around content moderation decisions to build public trust and clarify the balance between free speech and public safety.

- Stronger Community Standards: Continuously updating and enforcing community standards to reflect the evolving nature of misinformation and other harmful content.

In summary, the challenges faced by Facebook during the COVID-19 pandemic underscore the complex responsibilities of social media platforms in managing public health information. As these platforms continue to evolve, they must strike a delicate balance between enabling free expression and protecting users from the dangers of misinformation.

6.8 The Role of WhatsApp in the Spread of Violence in India

WhatsApp, a messaging service owned by Facebook (now Meta), has been implicated in the spread of misinformation and violence in India. In several incidents, false rumors about child kidnappings and organ trafficking spread rapidly through WhatsApp groups, leading to mob violence and the deaths of innocent people.

The platform’s end-to-end encryption, which ensures that only the communicating users can read the messages, made it difficult for authorities to trace the origin of the rumors or for the platform to intervene effectively. The spread of misinformation via WhatsApp groups, often among small communities with limited access to other information sources, illustrated the dangers of unmoderated, encrypted communication in fueling violence.

In response, WhatsApp introduced several measures to curb the spread of misinformation, such as limiting the number of times a message could be forwarded and labeling forwarded messages to make users more aware of their origin. However, these measures have had limited success in preventing the spread of harmful rumors.

This case study highlights the challenges of moderating content in encrypted messaging services and the unintended consequences of privacy features. It also raises important questions about the balance between user privacy and the need to prevent harm in environments where misinformation can quickly lead to violence.

§

7. Future Directions and Solutions

Now that we have discussed the legal, moral, and practical challenges facing social media platforms, it is important to think about how these platforms can be made more accountable in the future. What reforms, innovations, and policy changes can ensure that platforms fulfill their role in society responsibly?

7.1 Reforming Legislation: Revisiting Section 230 and Beyond

One of the most discussed potential reforms is revising Section 230 in the United States. While Section 230 provides platforms with important protections, there is a growing consensus that this legislation needs to be adjusted to hold platforms more accountable for the content shared on their sites. Proposals range from limiting legal protections for platforms that fail to remove harmful content to requiring more transparency and accountability in their moderation practices.

In the European Union, the Digital Services Act offers a possible model for how legislation can evolve to make platforms more accountable. By requiring platforms to act on illegal content once they are notified of it and to provide transparency about their moderation processes, the act seeks a balance between protecting users and ensuring free speech. However, the question remains how effective this legislation will be in practice and whether other regions can adopt similar measures.

7.2 Innovations in Moderation: The Future of AI and Human Involvement

Another direction for the future lies in technological and procedural innovations in moderation. While AI and machine learning already play an important role in content moderation, significant improvements are still possible. Investments in AI systems that better understand context and capture the nuances of language and culture will be crucial for improving moderation.

Moreover, human moderation remains an indispensable part of the process. Developing support systems for moderators, such as mental health care and training in cultural sensitivity, can help alleviate the challenges they face. Additionally, platforms can experiment with more decentralized moderation models, where communities themselves are involved in enforcing rules and norms, leading to a more nuanced and contextual approach to moderation.

7.3 The Role of Users and Communities: Self-Regulation and Education

Users and communities also play an important role in the future of responsibility on social media. Platforms can offer more tools and resources to empower users to report, moderate, and even regulate content within their own networks. This could foster a more decentralized and democratic approach to moderation, with users actively participating in maintaining community norms.

Education also plays a crucial role. Raising awareness among users about the impact of their online behavior, promoting digital literacy, and providing resources to critically engage with online information can contribute to a healthier and more responsible online ecosystem.

7.4 Collaboration with Governments and NGOs: Toward a Holistic Approach

Finally, collaboration between social media platforms, governments, and non-governmental organizations (NGOs) is essential for promoting responsibility. Platforms can work with governments to ensure that their policies align with legislation and societal expectations. NGOs can play a key role in monitoring the impact of social media on human rights and promoting responsible practices.

This collaboration, however, must be based on a balanced approach that protects free speech while recognizing the need to regulate harmful content. Transparency and accountability must be central to these partnerships so that platforms are not only accountable to governments but also to the broader public they serve.

§

Conclusion

The responsibility of social media platforms is a complex and multifaceted issue that touches on legal, moral, practical, and social concerns. As platforms like Facebook, Twitter, and YouTube increasingly form the backbone of global discourse, they must also take responsibility for the content they host and the impact they have on society.

Just as an individual might be held accountable for hosting a dangerous group in their home, social media platforms are increasingly being scrutinized for the content they allow to proliferate on their sites. Legal reforms such as revisiting Section 230, innovations in moderation technology, and greater involvement of users and communities are all important steps toward a future where social media platforms fulfill their role responsibly. Moreover, public pressure will continue to grow, forcing platforms to continually evaluate and adjust their policies to maintain their reputation and the trust of their users.

The path to a responsible digital future is not easy, but by working together with governments, NGOs, and users, social media platforms can contribute to a healthier, safer, and more inclusive online environment. It is up to all of us to ensure that these platforms are not only places of free speech but also of responsibility and respect for human dignity.
