If you have ever watched a stage backdrop that seemed to breathe with the music, or wandered through an exhibition where the walls changed as people moved, you have met generative visuals. They are not hand-animated frame by frame; they are built as systems. You design rules, add a few controls, connect them to inputs like sound or sensors, and let the system run. Small nudges to those controls can produce completely different outcomes. That is part of the magic.

This guide walks you through what generative visuals are, how people make them with tools like TouchDesigner and Resolume, why they are so popular, and how you can build your first piece without getting lost in jargon.

A simple definition

Generative visuals are images or animations produced by a process. Instead of drawing a single look, you design a way to create many looks that belong to the same family. Think recipes, not finished dishes. You bring ingredients like shapes, noise, and color. You define relationships and rules. Then the system cooks for you, in real time or through a render.

How they differ from traditional motion work

Traditional workflows start with timelines and assets. Generative work starts with logic and parameters. With a generative system, a circle is not just a layer. It might be one member of a population that grows, rotates, scales, and changes color based on rules and inputs. You do not keyframe every detail. You build a living setup and steer it.

Real-time or pre-rendered

Both are valid and both are useful. Real-time output is reactive and great for shows, installations, and playful exploration. Pre-rendered clips are predictable, easy to review with clients, and often deliver the cleanest image quality. Many artists do both: they experiment live, then capture the best moments as loops for later use.

Core ideas you will meet often

Parameters. These are your knobs and sliders. Speed, density, hue, distortion. Good parameter design is the difference between chaos and control.

Modulation. One signal shapes another. An audio envelope can change a scale value. A slow oscillator can rotate a grid. Modulation makes visuals feel alive.
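To make the idea concrete, here is a minimal Python sketch of two modulation sources. A slow sine oscillator (an LFO) swings a rotation value, and an envelope in the 0 to 1 range pushes a scale value up. The function names and default rates are illustrative assumptions, not any tool's API. In a node-based tool, the same math usually lives inside an oscillator or LFO node.

```python
import math


def lfo(t, rate_hz, depth=1.0, offset=0.0):
    """Sine low-frequency oscillator: offset + depth * sin(2*pi*rate*t)."""
    return offset + depth * math.sin(2.0 * math.pi * rate_hz * t)


def modulated_rotation(t, base_deg=0.0, rate_hz=0.05, depth_deg=45.0):
    # A slow oscillator (one cycle every 20 s by default) swings the rotation
    # up to depth_deg either side of base_deg.
    return lfo(t, rate_hz, depth=depth_deg, offset=base_deg)


def modulated_scale(base_scale, envelope, amount=0.5):
    # An envelope in 0..1 pushes the scale up by at most `amount` (50 %).
    envelope = min(max(envelope, 0.0), 1.0)  # clamp so spikes cannot overshoot
    return base_scale * (1.0 + amount * envelope)


# Per frame, at time t in seconds and with some smoothed audio envelope:
# rotation = modulated_rotation(t); scale = modulated_scale(1.0, envelope)
```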

Seeds and randomness. A seed is the starting number for a pseudorandom generator. The same seed recreates the same variation later, so save good seeds.
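Here is a minimal sketch of seed-based variation in Python. It uses the standard library's `random.Random` so each generator keeps its own state. `make_variation` and the preset names are hypothetical. The point is that two calls with the same seed return identical values, so a note like "seed 1234" is enough to rebuild a look later. Seeds only reproduce results inside the same tool or code: another tool's random or noise functions will turn the same number into something different.

```python
import random


def make_variation(seed, count=5):
    """Return `count` reproducible (x, y, hue) tuples for a given seed."""
    rng = random.Random(seed)  # private generator: other code cannot disturb it
    return [
        (rng.random(), rng.random(), rng.uniform(0.0, 360.0))
        for _ in range(count)
    ]


# Hypothetical notebook of seeds worth keeping.
SAVED_SEEDS = {"calm_grid": 1234, "dense_swirl": 98765}

look_a = make_variation(SAVED_SEEDS["calm_grid"])
look_b = make_variation(SAVED_SEEDS["calm_grid"])
assert look_a == look_b  # same seed, same "random" layout
```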

Noise. Perlin and Simplex noise are smooth pseudorandom patterns that create organic movement and textures. They are easy on the eye and easy to map to motion.
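The sketch below shows a one-dimensional gradient noise in the spirit of Perlin noise. It is written for illustration and is not Ken Perlin's reference implementation. Random slopes sit at integer lattice points, and a quintic fade curve blends their contributions, so the output is smooth and passes through zero at every integer. The gradient table comes from a seed, which means the same seed gives the same motion. All names here are hypothetical.

```python
import math
import random


def make_gradients(seed, size=256):
    """Random slopes in -1..1, one per lattice point, fixed by the seed."""
    rng = random.Random(seed)
    return [rng.uniform(-1.0, 1.0) for _ in range(size)]


def fade(t):
    # Quintic curve 6t^5 - 15t^4 + 10t^3: flat at t = 0 and t = 1,
    # so neighboring cells join without visible creases.
    return t * t * t * (t * (t * 6.0 - 15.0) + 10.0)


def gradient_noise_1d(x, grads):
    """Smooth 1D gradient noise; returns 0.0 at every integer x."""
    i0 = math.floor(x)
    t = x - i0
    g0 = grads[i0 % len(grads)]        # slope at the left lattice point
    g1 = grads[(i0 + 1) % len(grads)]  # slope at the right lattice point
    n0 = g0 * t                        # left slope's contribution at x
    n1 = g1 * (t - 1.0)                # right slope's contribution at x
    return n0 + fade(t) * (n1 - n0)


grads = make_gradients(seed=7)
# Sample slowly over time for drifting, organic motion, e.g. an x offset:
offsets = [gradient_noise_1d(frame * 0.02, grads) for frame in range(120)]
```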

Instancing. The GPU can draw many copies of a thing, each with a small variation. This is perfect for swarms, grids, and particle-like scenes.

Feedback. Reuse the previous frame as input for the next. You get trails, echoes, and evolving textures. It is powerful, so keep your ranges in check.
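Here is a minimal Python sketch of one feedback step on a single row of grayscale pixels. The previous output is multiplied by a decay factor, the new frame is added, and the result is clamped. The names and defaults are assumptions for illustration. In practice, "keep your ranges in check" means keeping `decay` below 1 and clamping the sum. Without both, values can grow every frame until the image saturates.

```python
def feedback_step(previous, current, decay=0.9, gain=1.0):
    """One feedback pass over a row of grayscale pixels in 0..1."""
    if not 0.0 <= decay < 1.0:
        raise ValueError("decay must be in [0, 1), or trails never fade")
    # Fade the previous output, add the new frame, clamp to the valid range.
    return [min(1.0, max(0.0, decay * p + gain * c))
            for p, c in zip(previous, current)]


# A single bright pixel, then ten empty frames: the trail fades by 10 % per frame.
frame = feedback_step([0.0, 0.0, 0.0], [0.0, 1.0, 0.0])
for _ in range(10):
    frame = feedback_step(frame, [0.0, 0.0, 0.0])
# frame[1] is now 0.9 ** 10, about 0.35
```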

Shaders. Small programs that run on the GPU to color pixels or move vertices. You can make great work without writing shaders, but learning a little GLSL gives you finer control and speed.

Data inputs. Audio, MIDI, OSC, cameras, sensors, CSV files. Generative systems love data. You can make visuals that respond to almost anything.

Tools and workflows that creators rely on

TouchDesigner in a few words

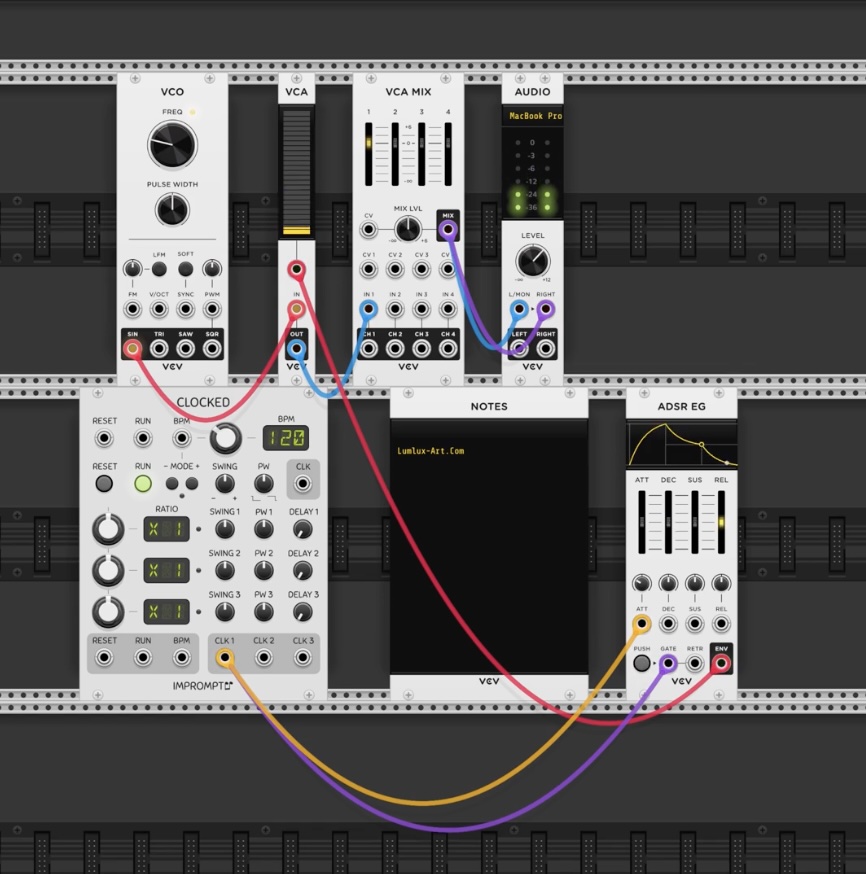

TouchDesigner is a visual programming environment that uses operators you connect together. TOPs handle textures. CHOPs handle control signals. SOPs handle geometry. DATs handle data. You can build entire systems with these blocks, then add Python or GLSL when you need deeper control.

What it is great at

- Working with inputs and outputs, from audio and MIDI to DMX and NDI

- Building custom logic that reacts to sensors or cameras

- Real time GPU work with a clear view of performance

- Packaging reusable components that you can drop into future projects

What to watch out for

It is easy to build heavy graphs. Profile early, name things clearly, and keep modules tidy. Organization matters as much as creativity.

Resolume in a few words

Resolume is a performance tool. Think decks, layers, and clips. You trigger media, mix it, apply effects, and map everything to your controllers. It is fast, it is reliable, and it is made for shows.

What it is great at

- Live playback with instant control and safe mapping

- Layering clips and FX for a polished show look

- Receiving inputs from other apps through Syphon, Spout, or NDI

- Quick mapping for LED walls and projection

What to watch out for

Complex generative logic is better authored elsewhere. Use Resolume to mix, map, and perform. Use TouchDesigner, Notch, or code for deep generation.

The popular hybrid

Many artists author looks in TouchDesigner and route them into Resolume for show control. On macOS the bridge is Syphon. On Windows it is Spout. On any network you can use NDI. This gives you custom, reactive content inside a performance tool that can handle cues, controllers, and mapping. It also gives you simple fallbacks. If your generator misbehaves, you can switch to a pre rendered loop and keep the show running.

Other routes you should know

p5.js and Processing are great for learning fundamentals and making web sketches. Notch is a node-based real-time tool that shines in events and branded content. VDMX is a modular performance environment on macOS. Blender Geometry Nodes gives you procedural 3D that you can render into loops. Unity and Unreal are heavy, but for interactive spaces they can be perfect.

Why the field is booming

Immersive culture. People want environments that respond. Concerts, clubs, museums, and pop ups build spaces where visuals evolve with sound and audience behavior. Generative systems are built for that. They can run for hours and still feel fresh.

Powerful yet accessible tools. GPUs are faster and cheaper than ever. Node-based environments lower the barrier to entry. Inter-app routing is stable, so you can connect authoring tools and show tools in minutes.

Short form video. Reels, Shorts, and TikTok reward surprising movement and quick variation. Generative loops are perfect fuel. You can post often, explore styles, and let the audience tell you what resonates.

Experiential branding. Brands no longer want static visuals only. They want identities that move and adapt. Generative systems can create coherent variation across screens, events, and campaigns.

Live interactivity. When visuals react to audio, motion, or touch, the experience feels alive. That feeling is memorable, and it brings people back.

Build your first generative visual

You can start simple and still get something beautiful. Here is a compact path that works in many tools.

Pick a use case

Decide where your piece will live. If you want a VJ loop, aim for a seamless 10 to 30 second clip at 1920×1080 or as a 1080×1080 square. If you plan to perform live, target the venue's resolution and aim for a steady 60 frames per second. If this is for social media, consider a 9:16 portrait frame and design motion that reads in under ten seconds.
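A quick way to sanity-check these targets is to turn them into numbers. This Python sketch computes the time budget for each frame at a given frame rate, plus the frame count of a loop. The helper names are hypothetical. At 60 fps, each frame has about 16.7 ms for everything: generation, effects, and output. A 30-second loop at 60 fps is 1800 frames. A 9:16 portrait canvas at 1080×1920 has exactly as many pixels as landscape 1080p, so it costs about the same to render.

```python
def frame_budget_ms(fps):
    """Time available per frame, in milliseconds."""
    return 1000.0 / fps


def loop_frames(seconds, fps):
    """Number of frames in a loop of the given length."""
    return round(seconds * fps)


def megapixels(width, height):
    return width * height / 1e6


print(f"{frame_budget_ms(60):.1f} ms per frame at 60 fps")  # 16.7 ms
print(loop_frames(10, 60), "to", loop_frames(30, 60), "frames")  # 600 to 1800
print(megapixels(1920, 1080), megapixels(1080, 1080), megapixels(1080, 1920))
# 2.0736 1.1664 2.0736
```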

Set up your environment

In TouchDesigner, create a TOP chain for visuals and a CHOP lane for control. Add an Audio Device In CHOP if you will react to music. Wrap things in a Base component and expose a few custom parameters, for example Seed, Speed, Distort, and Hue.

In Resolume, create a Composition at your target resolution. Add a generator or a temporary loop, stack a few effects, and map a couple of controller knobs. If you plan a hybrid, prepare a Syphon, Spout, or NDI input from TouchDesigner.

Build a visual core

Start with a noise pattern that slowly shifts. Combine it with a simple shape like a circle or rectangle. Use displacement or compositing to let the shape inherit motion and texture from the noise. Add a transform for scale and rotation. Add post effects like levels, a gentle blur, edges, or a feedback loop that creates trails. Keep every block swappable so you can experiment fast.

Tip. If displacement gets messy, try a gradient map or lookup. Turning luminance into color often gives you cleaner control.
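A gradient map comes down to two steps. First, reduce each pixel to a single brightness value. Then look that value up in a color ramp. This Python sketch uses Rec. 709 luma weights and interpolates linearly between hypothetical palette stops. In a node tool, a lookup or ramp operator would do the same job on the GPU.

```python
def luminance(rgb):
    """Rec. 709 luma weights applied to r, g, b in 0..1 (no linearization)."""
    r, g, b = rgb
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def gradient_map(lum, stops):
    """Look up a color for lum in (position, (r, g, b)) stops sorted by position."""
    lum = min(max(lum, 0.0), 1.0)
    if lum <= stops[0][0]:
        return stops[0][1]
    for (p0, c0), (p1, c1) in zip(stops, stops[1:]):
        if lum <= p1:
            t = (lum - p0) / (p1 - p0) if p1 > p0 else 0.0
            # Linear blend between the two neighboring stops.
            return tuple(a + t * (b - a) for a, b in zip(c0, c1))
    return stops[-1][1]


# Hypothetical "warm" palette: black -> orange -> pale yellow.
WARM = [(0.0, (0.0, 0.0, 0.0)), (0.5, (1.0, 0.3, 0.0)), (1.0, (1.0, 1.0, 0.8))]

pixel = (0.2, 0.4, 0.6)
colored = gradient_map(luminance(pixel), WARM)
```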

Make it reactive

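For audio reactivity, analyze the signal and smooth it before mapping it to parameters, because raw audio is spiky. In TouchDesigner, route Audio Device In to Analyze. Smooth the result, for example with a Lag or Filter CHOP, then use Math to scale it into a useful range. Add a Limit CHOP if you need a hard clamp. To map lows to size or distortion, mids to pattern density, and highs to color or edges, first split the audio into bands, for example with Audio Filter or Audio Spectrum CHOPs. Keep a manual override on a knob so you can rein things in if the music changes.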
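The smoothing step is often an envelope follower. It rises quickly when the level goes up (attack) and falls slowly when the level drops (release), which turns spiky audio into motion that reads as musical. This Python sketch assumes the band level arrives once per video frame at 60 fps. The class name, time constants, and output range are assumptions to tune by ear. `map_range` stands in for the scale-and-clamp step.

```python
import math


class EnvelopeFollower:
    """Fast-attack, slow-release smoother for a level updated rate_hz times per second."""

    def __init__(self, attack_s=0.01, release_s=0.25, rate_hz=60.0):
        # One-pole coefficients: closer to 1.0 means slower response.
        self.attack = math.exp(-1.0 / (attack_s * rate_hz))
        self.release = math.exp(-1.0 / (release_s * rate_hz))
        self.value = 0.0

    def process(self, level):
        level = abs(level)
        coeff = self.attack if level > self.value else self.release
        self.value = coeff * self.value + (1.0 - coeff) * level
        return self.value


def map_range(x, in_lo, in_hi, out_lo, out_hi):
    """Rescale x from in_lo..in_hi to out_lo..out_hi, clamped at both ends."""
    t = (x - in_lo) / (in_hi - in_lo)
    t = min(max(t, 0.0), 1.0)  # clamp: a loud hit cannot push past out_hi
    return out_lo + t * (out_hi - out_lo)


lows = EnvelopeFollower(attack_s=0.02, release_s=0.3)
# Once per frame, with low_band_level from your analysis stage (assumed 0..1):
# size = map_range(lows.process(low_band_level), 0.0, 0.8, 1.0, 1.6)
```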

For controllers, pick two to four macro knobs that do something musical and obvious. Seed, Speed, Distort, Saturation are good starters. Map one or two buttons to scene or palette changes.
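Mapping a controller knob is mostly range conversion. MIDI control change (CC) messages carry 7-bit values from 0 to 127. This Python sketch maps those values onto parameter ranges. It adds an optional curve so that a Speed knob gets finer control at the slow end. The CC numbers and ranges in `MACROS` are hypothetical; use whatever your controller sends.

```python
def cc_to_param(cc_value, lo, hi, curve=1.0):
    """Map a 7-bit MIDI CC value (0..127) onto lo..hi.

    curve > 1 spends more of the knob's travel near lo (finer slow speeds).
    """
    if not 0 <= cc_value <= 127:
        raise ValueError("MIDI CC values are 7-bit: 0..127")
    t = (cc_value / 127.0) ** curve
    return lo + t * (hi - lo)


# Hypothetical macro layout: CC number -> (parameter, lo, hi, curve).
MACROS = {
    21: ("Seed", 0, 999, 1.0),
    22: ("Speed", 0.0, 4.0, 2.0),
    23: ("Distort", 0.0, 1.0, 1.0),
    24: ("Saturation", 0.0, 1.0, 1.0),
}


def handle_cc(cc_number, cc_value, params):
    """Update params from one incoming CC message; unknown CCs are ignored."""
    if cc_number in MACROS:
        name, lo, hi, curve = MACROS[cc_number]
        value = cc_to_param(cc_value, lo, hi, curve)
        params[name] = round(value) if name == "Seed" else value  # seeds are integers
    return params
```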

Add structure

Give yourself states. Save five to ten presets that range from calm to energetic. Define three palettes: warm, cool, and complementary. Create simple scenes, for example Grid, Swirl, and Bloom, and practice transitions between them. If you can, add a tap tempo or a master speed that matches the energy of the track.
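Transitions between states are easier to practice when presets are plain data. This Python sketch stores two hypothetical presets as dictionaries and morphs between them. Continuous values are interpolated, and hue takes the shorter way around the color wheel. Discrete values such as Seed switch at the midpoint instead of being blended, because a seed halfway between two seeds is just an unrelated third seed.

```python
# Hypothetical presets; save your own values as you find them.
PRESETS = {
    "calm":      {"Speed": 0.2, "Distort": 0.1, "Hue": 210.0, "Seed": 17},
    "energetic": {"Speed": 2.5, "Distort": 0.8, "Hue": 20.0, "Seed": 4242},
}


def lerp(a, b, t):
    return a + (b - a) * t


def lerp_hue(a, b, t):
    # Take the shorter way around the 360-degree color wheel.
    delta = ((b - a + 180.0) % 360.0) - 180.0
    return (a + delta * t) % 360.0


def morph(a, b, t, snap=("Seed",)):
    """Blend preset a into preset b as t goes from 0 to 1."""
    t = min(max(t, 0.0), 1.0)
    out = {}
    for key in a:
        if key in snap:
            out[key] = a[key] if t < 0.5 else b[key]  # discrete: switch halfway
        elif key == "Hue":
            out[key] = lerp_hue(a[key], b[key], t)
        else:
            out[key] = lerp(a[key], b[key], t)
    return out


# Drive t from a fader, or ramp it over a few bars for a timed transition.
halfway = morph(PRESETS["calm"], PRESETS["energetic"], 0.5)
```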

Export or perform

If you export, use a codec that fits the job. For VJ work, HAP or ProRes is common, with alpha if needed. For social, H.264 or H.265 is fine. To make loops seamless, line up start and end states or wrap the phase of your oscillators.
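The phase-wrapping idea can be sketched in Python as follows. Drive every oscillator from a normalized phase that returns to 0 exactly at the loop length. Use a whole number of cycles per loop, so each wave ends where it began. For noise, a common trick is to move the sample point around a circle in 2D noise space, so the path closes on itself. The helper names are hypothetical, and the circle coordinates are meant to feed whatever 2D noise function your tool provides.

```python
import math


def loop_phase(frame, loop_frames):
    """Normalized phase in [0, 1) that wraps exactly at the loop length."""
    return (frame % loop_frames) / loop_frames


def looping_osc(frame, loop_frames, cycles=1):
    # A whole number of cycles per loop makes the wave end where it began.
    if cycles != int(cycles):
        raise ValueError("use a whole number of cycles per loop")
    return math.sin(2.0 * math.pi * cycles * loop_phase(frame, loop_frames))


def looping_noise_coords(frame, loop_frames, radius=1.0):
    # Walk a circle through 2D noise space; the path closes on itself,
    # so noise sampled at these coordinates loops too.
    angle = 2.0 * math.pi * loop_phase(frame, loop_frames)
    return radius * math.cos(angle), radius * math.sin(angle)


LOOP = 600  # a 10 s loop at 60 fps
# Render frames 0..LOOP-1 only: frame LOOP would duplicate frame 0 and stutter.
values = [looping_osc(f, LOOP, cycles=3) for f in range(LOOP)]
```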

If you perform, route TouchDesigner into Resolume through Syphon, Spout, or NDI. Build a deck with your live feed, two safe fallback loops, a text or branding layer, and a couple of mapped effect chains. Soundcheck early, confirm controller mappings, and always keep a panic key that reveals a safe loop.

Iterate and document

Save versions as you go. Note seed values and the ranges that feel good. Take screenshots of presets. Record short clips for your reel and social posts. After a session or a show, write down what worked and what broke so you can fix it next time.

Common pitfalls and how to dodge them

- Dropped frames. Reduce resolution, cap feedback iterations, watch VRAM use.

- Overreactive audio. Always smooth and limit. Use envelopes rather than raw peaks.

- Banding on gradients. Add a little dither or noise. Avoid heavy compression.

- Aliasing on thin lines. Blur slightly before scaling or render larger and downsample.

- Visual noise without structure. Define build, peak, and release, and practice the transitions.
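Dither is easy to see in numbers. This Python sketch quantizes a value to a small number of levels, once plainly and once with a little uniform noise added before rounding. The plain version always snaps to the same step, which is what produces bands across a smooth gradient. The dithered version lands on neighboring steps in proportion, so the average stays close to the true value and the eye reads fine grain instead of stripes. The function names are hypothetical, and real pipelines often use more refined dither patterns.

```python
import random


def quantize(value, levels=256):
    """Round a 0..1 value to the nearest of `levels` evenly spaced steps."""
    return round(value * (levels - 1)) / (levels - 1)


def quantize_dithered(value, rng, levels=256):
    # Add uniform noise of up to half a step either way before rounding,
    # so a smooth ramp breaks into fine grain instead of flat bands.
    step = 1.0 / (levels - 1)
    noisy = value + (rng.random() - 0.5) * step
    return min(1.0, max(0.0, quantize(noisy, levels)))


# Exaggerated example with only 4 levels (0, 1/3, 2/3, 1):
rng = random.Random(0)
plain = quantize(0.4, levels=4)  # always 1/3, an error of about 0.067
samples = [quantize_dithered(0.4, rng, levels=4) for _ in range(20000)]
average = sum(samples) / len(samples)  # lands close to 0.4
```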

A quick quality check before you share

- You have three distinct presets for different energy levels.

- Macro controls feel expressive.

- Audio mapping is musical, not chaotic.

- Frame rate is stable at your target resolution.

- You have a mezzanine version for shows and a compressed version for the web, each with a clean thumbnail.

Examples and patterns to study

Look at room-scale work that evolves for hours without repeating. Notice how slow phases set up peaks, and how color palettes guide mood. Study city-scale canvases where legibility matters from a distance. Big shapes, slow motion, strong contrast. For hybrid shows, pay attention to how artists map inputs to just a few macro controls so the system stays stable even when the crowd or music gets wild.

If you want to dig into tools, read the TouchDesigner operator docs and forums for patterns and performance tips. Browse Resolume’s documentation for mapping and controller workflows. When you are ready to connect apps, learn the basics of Syphon, Spout, and NDI for low-latency routing.

Examples & Reference Patterns

Artists & spaces to study (conceptually):

- Room-scale ecosystems: spaces where visuals flow between rooms and react to visitors. Pay attention to motion grammar (slow → peak → calm), not just color.

- City-scale canvases: architectural displays that demand bold shapes, low-frequency motion, and high legibility from a distance.

- Hybrid shows: installations that combine sound, sensors, and responsive visuals. Note how inputs are mapped to macro parameters for stability.

Toolchain references (what to look up as you go):

- TouchDesigner operator families (TOP/CHOP/SOP/DAT), performance monitor, and component design.

- Resolume’s deck/layer philosophy, mapping, and MIDI/OSC routing.

- Syphon/Spout/NDI basics for low-latency inter-app video.

Patterns you can borrow immediately:

- Immersive maze: each room = a “state” (palette + rules). Transitions trigger on proximity or audio thresholds.

- Three-tier stage design: build-up → peak → release; assign a macro to each tier for quick shifts.

- Façade readability: big blocks, slow evolutions, day/night palettes.

FAQ — Short, Practical Answers

Do I need to code?

No. Node-based tools (TouchDesigner) and performance apps (Resolume) let you go far without code. GLSL and p5.js unlock advanced looks later.

What hardware matters most?

GPU first (at least 6–8 GB of VRAM for multi-layer 1080p; more for 4K), then CPU cores, 16–32 GB of RAM, and NVMe storage. Use a stable audio interface. Bring spares and fallbacks on stage.
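A rough way to see why resolution drives VRAM needs is to count bytes per buffer. This Python sketch assumes uncompressed RGBA buffers. The layer and buffer counts are hypothetical, and real usage also includes decoded clips, textures, and the tool's own overhead. Treat the result as a lower bound, not a sizing guide.

```python
def buffer_mb(width, height, channels=4, bytes_per_channel=1):
    """Size of one uncompressed frame buffer in megabytes (10**6 bytes)."""
    return width * height * channels * bytes_per_channel / 1e6


def estimate_mb(width, height, layers, bytes_per_channel=1, buffers_per_layer=2):
    # buffers_per_layer is an assumption, e.g. one output plus one
    # intermediate or feedback buffer per layer.
    return layers * buffers_per_layer * buffer_mb(width, height, 4, bytes_per_channel)


print(buffer_mb(1920, 1080))                       # 8.2944  (8-bit RGBA 1080p)
print(buffer_mb(1920, 1080, bytes_per_channel=2))  # 16.5888 (16-bit float RGBA)
print(buffer_mb(3840, 2160))                       # 33.1776 (4K: four times 1080p)
```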

Real-time or pre-rendered?

Real time for interactivity and performance; pre-rendered for guaranteed fidelity. Many pros do both.

How do I avoid chaotic audio reactivity?

Smooth and clamp signals; map lows to scale/distort, mids to density, highs to color/edge. Keep a manual override knob.

Best export formats?

VJ: HAP/ProRes (alpha if needed). Social: H.264/H.265 at platform aspect (9:16/1:1). Test compression; adjust contrast/sharpen.

Common beginner mistakes?

Over-stacked effects, unbounded feedback, thin high-freq lines (aliasing), mapping too many parameters to raw audio, messy project organization.

Frame-rate tips?

Lower canvas resolution, reduce heavy FX iterations, profile bottlenecks, monitor VRAM, pre-bake expensive steps.

Where does AI fit?

As a texture/style source or control signal. For live shows, check inference latency; keep non-AI fallbacks.

Licensing for client work?

Treat outputs like any motion piece; ensure rights for third-party assets. For installations: specify runtime rights and maintenance.

Path to paid gigs?

Build a tight 30–60 s reel, post short loops regularly, collaborate with local DJs/VJs, share a small free pack (with credit) to grow reach.

Mini-Glossary (Quick Reference)

Algorithm

A repeatable set of rules that produces visuals from inputs. In practice: your node graph or shader logic.

Parameter

A named control (e.g., Speed, Density, Hue) that changes behavior without rewriting the system.

Modulation

Using one signal to control another (e.g., audio amplitude → scale of shapes). Core to making visuals feel “alive.”

Seed

An initial value for pseudorandom generators. Same seed = same “random” outcome; different seed = new variation.

Noise (Perlin/Simplex)

Smooth pseudorandom functions used for organic motion, textures, and flow fields; less harsh than pure randomness.

Shader (GLSL)

A small program running on the GPU that shades pixels (fragment shader) or moves vertices (vertex shader) in real time.

GPU vs. CPU

GPU excels at massively parallel pixel/vertex work (real-time rendering). CPU handles general logic, I/O, and orchestration.

Instancing

Efficiently drawing many copies of a shape/mesh with per-instance variation (position, scale, color) using the GPU.

Feedback

Re-using the previous frame as input to the next frame; creates trails, echoes, and complex emergent motion.

Framebuffer / Render Target

An off-screen image buffer where you render intermediate passes before compositing/output.

Compositing

Combining layers/images using blend modes, masks, and effects to form the final picture.

Latency

Delay between input and visual response. Critical for live shows—keep routing (Spout/Syphon/NDI) and FX chains efficient.

MIDI / OSC

Protocols for real-time control. MIDI is hardware-centric (notes/CC), OSC is network-friendly and flexible (addresses/values).
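To see how simple OSC is on the wire, here is a minimal Python encoder for an OSC 1.0 message, following the OSC 1.0 specification. A message is a null-terminated address padded to a multiple of 4 bytes, then a type tag string such as `,f`, then big-endian arguments. This sketch handles only int32, float32, and string arguments. The address `/speed` is a placeholder; check which addresses the receiving app actually listens to.

```python
import struct


def _osc_string(s):
    """Null-terminate an ASCII string and pad it to a multiple of 4 bytes."""
    data = s.encode("ascii") + b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def osc_message(address, *args):
    """Encode an OSC 1.0 message with int32, float32 or string arguments."""
    tags = ","
    payload = b""
    for arg in args:
        if isinstance(arg, bool):
            raise TypeError("booleans are not handled in this sketch")
        if isinstance(arg, int):
            tags += "i"
            payload += struct.pack(">i", arg)  # big-endian int32
        elif isinstance(arg, float):
            tags += "f"
            payload += struct.pack(">f", arg)  # big-endian float32
        elif isinstance(arg, str):
            tags += "s"
            payload += _osc_string(arg)
        else:
            raise TypeError(f"unsupported OSC argument: {arg!r}")
    return _osc_string(address) + _osc_string(tags) + payload


msg = osc_message("/speed", 0.5)  # hypothetical address
```

A UDP socket is enough to send the message: `socket.socket(socket.AF_INET, socket.SOCK_DGRAM).sendto(msg, ("127.0.0.1", 7000))`, where port 7000 is a placeholder.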

NDI / Spout / Syphon

Ways to route video between apps/machines: NDI over network; Spout (Windows) and Syphon (macOS) for local GPU-texture sharing.

Color Space & Gamma

How colors are encoded (sRGB, Rec.709, etc.). Mismatches cause washed blacks or oversaturated looks—calibrate for venue media.
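One concrete source of gamma problems is doing math on encoded values. This Python sketch implements the standard sRGB transfer functions (IEC 61966-2-1) for a single channel in the 0 to 1 range. An encoded mid-gray of 0.5 corresponds to roughly 0.214 in linear light. That is why blends and blurs computed directly on sRGB values can look darker than expected. Rec. 709 video uses a different transfer curve, so this sketch covers sRGB only, and `blend_linear` is a hypothetical helper.

```python
def srgb_to_linear(c):
    """Decode one sRGB-encoded channel (0..1) to linear light."""
    if c <= 0.04045:
        return c / 12.92
    return ((c + 0.055) / 1.055) ** 2.4


def linear_to_srgb(c):
    """Encode one linear-light channel (0..1) with the sRGB curve."""
    if c <= 0.0031308:
        return c * 12.92
    return 1.055 * c ** (1.0 / 2.4) - 0.055


def blend_linear(a, b, t=0.5):
    # Mix two sRGB-encoded values in linear light, then re-encode.
    mixed = (1.0 - t) * srgb_to_linear(a) + t * srgb_to_linear(b)
    return linear_to_srgb(mixed)


print(round(srgb_to_linear(0.5), 3))     # 0.214: encoded mid-gray is dark in linear light
print(round(blend_linear(0.0, 1.0), 3))  # 0.735: a physically even black/white mix
```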

Aliasing

Shimmering/jagged edges from under-sampling fine detail. Fix with slight blur, super-sampling, or thicker lines.

BPM / Tempo Mapping

Linking visual rates to musical tempo—either exact (clock/tap-tempo) or approximate (envelope-driven) for musical coherence.
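Exact tempo mapping usually starts from a clock or a tap tempo. This Python sketch estimates BPM from tap timestamps by averaging the intervals between taps and ignoring long pauses. It then derives a 0 to 1 phase within the current beat, which can drive pulses or cuts. The function names and the 2-second gap limit are assumptions.

```python
def bpm_from_taps(tap_times, max_gap_s=2.0):
    """Estimate BPM from tap timestamps in seconds, ignoring long pauses."""
    intervals = [b - a for a, b in zip(tap_times, tap_times[1:])
                 if 0.0 < b - a <= max_gap_s]
    if not intervals:
        return None  # need at least two taps close enough together
    return 60.0 / (sum(intervals) / len(intervals))


def beat_phase(t, bpm, start=0.0):
    """Position 0..1 inside the current beat, for pulses locked to the tempo."""
    beat_len = 60.0 / bpm
    return ((t - start) / beat_len) % 1.0


bpm = bpm_from_taps([0.0, 0.5, 1.0, 1.5])  # 120.0
pulse = 1.0 - beat_phase(0.75, bpm)        # falls from 1 to 0 across each beat
```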

Tiling / Repeat

Duplicating a frame across a grid to build patterns; mind seams and aspect ratio to avoid visible boundaries.

Profile (Performance Profiling)

Measuring GPU/CPU time per operator/effect to find bottlenecks and keep frame rate stable.

Mezzanine Codec (HAP/ProRes)

High-quality, performance-friendly formats for stage playback and further processing before delivery/compression.

Final thoughts

Generative visuals reward curiosity. You design a system, then learn from what it does. The more you iterate, the more you discover small controls that produce big shifts, and the more your style emerges. Start with a simple chain, make three presets, record a loop, and share it. Then build the next one.
